TL;DR:

- Use setImmediate

- Delegate to a child process

- Delegate to a worker thread

What is a CPU-bound Task?

In computer science, a task, job or process is said to be CPU-bound (or compute-bound) when the time it takes for it to complete is determined mainly by the speed of the central processor.

CPU-bound jobs spend most of their execution time on actual computation (“number crunching”) rather than communicating with and waiting for peripherals such as network or storage devices (which would make them I/O-bound instead).

A thread executing this function,

    function foo() {
      console.log('Start')
      for (let i = 0; i < Number.MAX_SAFE_INTEGER; i++) {
        // Busy loop: pure computation, no I/O
      }
      console.log('End')
    }

is CPU-bound. The loop runs Number.MAX_SAFE_INTEGER (2^53 − 1, about 9 × 10^15) iterations, so on a typical server it takes a very long time to finish.
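To put a number on that, here is a back-of-the-envelope estimate. The figure of one billion loop iterations per second is an assumption chosen for illustration, not a measurement, and estimateLoopDays is a hypothetical helper.

```javascript
// Rough estimate of how long foo()'s loop would run.
// iterationsPerSecond is an assumed figure, not a benchmark result.
function estimateLoopDays(iterationsPerSecond) {
  const iterations = Number.MAX_SAFE_INTEGER // 2^53 - 1
  const seconds = iterations / iterationsPerSecond
  return seconds / (60 * 60 * 24)
}

console.log(`~${Math.round(estimateLoopDays(1e9))} days`) // prints "~104 days"
```

Even if the real throughput were several times higher, the loop would still run for weeks, which is why the examples that follow never actually reach “End”.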

Why are CPU-bound tasks difficult in Node.js?

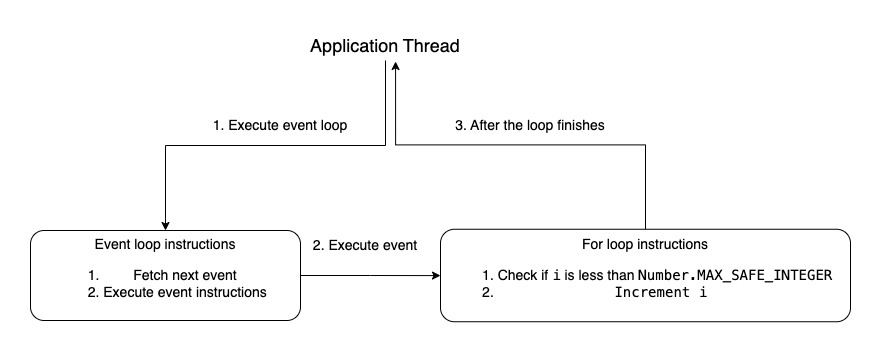

Node has an event-driven architecture. Each event is a set of instructions. The application thread picks an event from the queue, executes its instructions, and then moves on to the next event.

For I/O-bound events, the thread issues an I/O request and moves on to the next event, handling the I/O response later as another event in the queue.

However, for CPU-bound events, the thread must fully execute the instructions of the event before moving on. Since CPU-bound events take a long time to finish, the events behind them pile up in the queue. The event loop is then said to be blocked.
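A small experiment can make the blocking visible. The sketch below schedules a timer for 0 ms and then keeps the thread busy. The timer callback cannot run until the busy code returns. busyWait and measureTimerDelay are hypothetical helpers written for this illustration.

```javascript
// Keep the thread busy for roughly `ms` milliseconds (stand-in for CPU-bound work).
function busyWait(ms) {
  const end = Date.now() + ms
  while (Date.now() < end) {}
}

// Schedule a 0 ms timer, then block the thread.
// Resolves with the number of milliseconds that passed before the timer fired.
function measureTimerDelay(blockMs) {
  return new Promise((resolve) => {
    const scheduledAt = Date.now()
    setTimeout(() => resolve(Date.now() - scheduledAt), 0)
    busyWait(blockMs) // The timer callback cannot run until this returns
  })
}

measureTimerDelay(200).then((delay) => {
  console.log(`Timer requested for 0 ms, fired after ${delay} ms`)
})
```

The reported delay is at least as long as the blocking time, because the timer event sits in the queue until the thread is free again.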

Problem Scenario

To illustrate solutions, let’s create a server in Node.js that:

- Accepts a request on path /run

- Executes the CPU-bound function foo

- Returns an HTTP 200

Problems with the Naive Implementation

Consider a straightforward solution,

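    const http = require('http')

    http.createServer((req, res) => {
      if (req.url === '/run') {
        foo() // Blocks the event loop until the loop finishes
        res.end()
      } else {
        res.statusCode = 404 // Answer other paths instead of leaving them hanging
        res.end()
      }
    })
      .listen(3000, () => console.log('Listening'))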

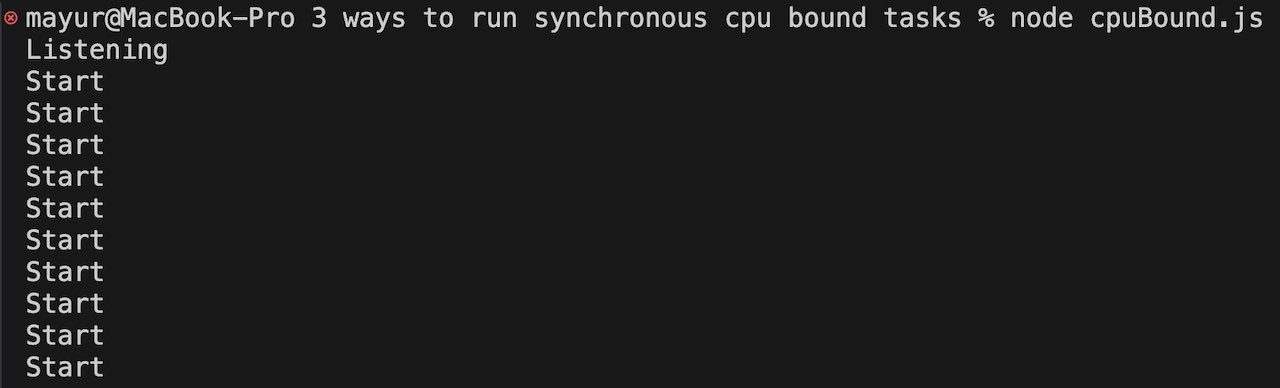

Running 10 parallel curl requests with seq 1 10 | xargs -n1 -P10 curl localhost:3000/run shows that only one request is processed at a time: the server logs a single “Start” and the remaining requests are blocked. In other words, requests are processed sequentially.

It’s easy to see why this happens. The thread is busy executing the instructions of the for loop of the first request and can process other events (for the other 9 requests) in the queue only after the for loop has finished.

What we need is a way to process all requests concurrently.

Solution 1: Interleaving with setImmediate

setImmediate() is a function that schedules code to run at some point in the future. It accepts a function to call and a list of arguments to pass to that function.

Despite its name, execution of a callback passed to setImmediate() is deferred and queued behind any I/O event that is already in the queue.
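The following sketch shows the two properties just described: extra arguments are forwarded to the callback, and the callback does not run immediately but only after the currently executing code has finished. observeOrder is a hypothetical helper. The sketch does not involve any I/O, so it only demonstrates the deferral past synchronous code.

```javascript
// Resolves with the order in which the two pieces of code ran.
function observeOrder() {
  return new Promise((resolve) => {
    const order = []
    // Arguments after the callback are passed to it when it runs.
    setImmediate((label) => {
      order.push(label)
      resolve(order)
    }, 'setImmediate callback')
    order.push('synchronous code')
  })
}

observeOrder().then((order) => console.log(order.join(' -> ')))
// prints "synchronous code -> setImmediate callback"
```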

How can we use this function to unblock the event loop?

Usually, a CPU-bound algorithm is built upon a set of steps: a set of recursive invocations, a loop, or any combination of those. So a simple solution to our problem is to give control back to the event loop after each of these steps completes (or after a certain number of them).

This way, any pending I/O can still be processed by the event loop in the intervals where the long-running algorithm yields the CPU. A simple way to achieve this is to schedule the next step of the algorithm to run after any pending I/O requests. This sounds like the perfect use case for the setImmediate() function:

    const stepSize = 10000 // Number of iterations to run before giving control back to the event loop

    function fooWithSetImmediate(start, onFinish) {
      if (start === 0) console.log('Start')

      // Run at most stepSize iterations, stopping at Number.MAX_SAFE_INTEGER
      const end = Math.min(start + stepSize, Number.MAX_SAFE_INTEGER)
      for (let i = start; i < end; i++) {
      }

      if (end === Number.MAX_SAFE_INTEGER) {
        console.log('End')
        return onFinish()
      }

      // Schedule the next batch behind the events already in the queue
      setImmediate(fooWithSetImmediate, end, onFinish)
    }

Here, the for loop executes at most stepSize iterations, and then control is given back to the event loop so that it can handle any pending I/O. All of the work still runs on a single thread, so requests are interleaved rather than executed in parallel.

Try running the 10 parallel curl requests again with seq 1 10 | xargs -n1 -P10 curl localhost:3000/run. You’ll notice that the server accepts all requests, logs “Start” 10 times, and begins executing the for loop for each request.
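For completeness, here is one way the interleaved loop might be wired into the server. This is a sketch, not the only possible arrangement. countInChunks is a hypothetical variant of the function above that takes the total as a parameter so a small value can be used for experiments, and createInterleavedServer is likewise an assumed name.

```javascript
const http = require('http')

// Count from `start` to `total` in slices of `stepSize`,
// yielding to the event loop between slices.
function countInChunks(start, total, stepSize, onFinish) {
  const end = Math.min(start + stepSize, total)
  for (let i = start; i < end; i++) {}
  if (end === total) return onFinish()
  setImmediate(countInChunks, end, total, stepSize, onFinish)
}

// Hypothetical factory: returns a server that runs the chunked loop on /run.
function createInterleavedServer(total = Number.MAX_SAFE_INTEGER, stepSize = 10000) {
  return http.createServer((req, res) => {
    if (req.url !== '/run') {
      res.statusCode = 404
      return res.end()
    }
    console.log('Start')
    countInChunks(0, total, stepSize, () => {
      console.log('End')
      res.end() // Respond only once the whole count has finished
    })
  })
}
```

Calling createInterleavedServer().listen(3000, () => console.log('Listening')) starts the server on the same port as the earlier examples. Because the handler returns after the first slice, the event loop is free to accept the next request while earlier ones are still counting.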

Solution 2: Use multiple processes

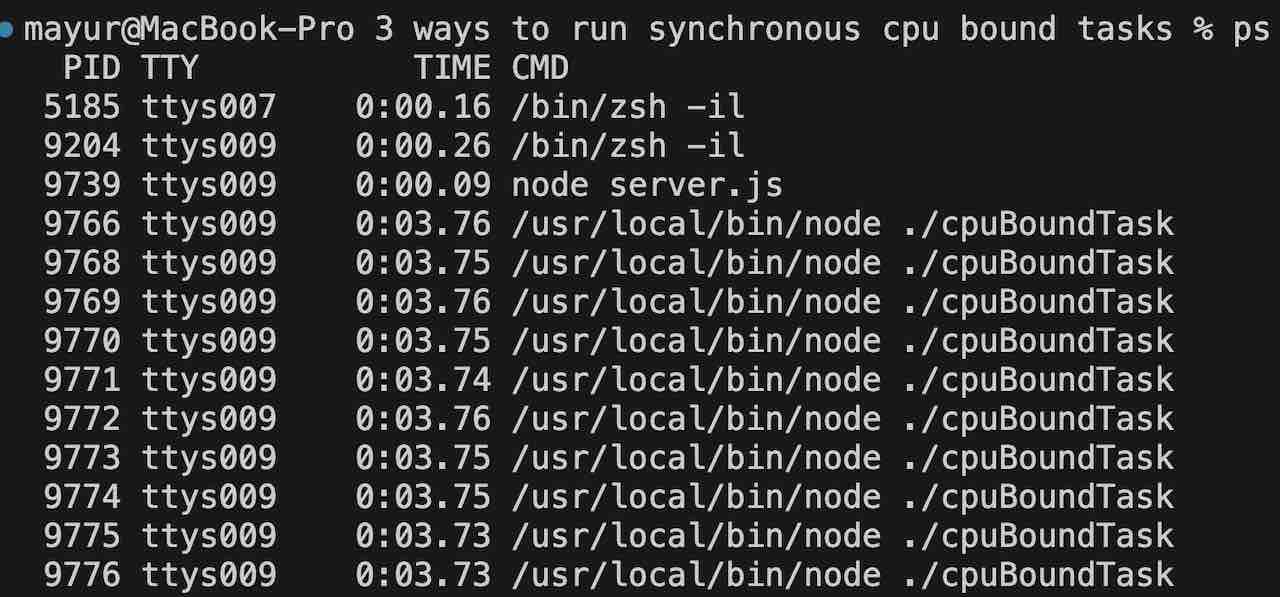

Delegate CPU-bound tasks to a different process using the child_process module. For each request, the application thread spawns a new process or picks an idle one from a process pool. Once the task is complete, the child process notifies the parent by sending it a message.

Child process – cpuBoundTask.js

    process.on('message', () => {
      console.log('Start')
      for (let i = 0; i < Number.MAX_SAFE_INTEGER; i++) {}
      console.log('End')
      process.send('Done') // Send message to parent
    })

server.js

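    const http = require('http')
    const { fork } = require('child_process')

    http.createServer((req, res) => {
      if (req.url === '/run') {
        const child = fork('./cpuBoundTask') // Fork a new process
        child.on('message', () => {
          res.end()
          child.kill() // Terminate the child so finished processes do not accumulate
        })
        child.send('Start') // Start its execution
      } else {
        res.statusCode = 404 // Answer other paths instead of leaving them hanging
        res.end()
      }
    })
      .listen(3000, () => console.log('Listening'))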

Run 10 parallel requests. You’ll notice 10 “Start” messages logged and 10 new processes created.
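The server above forks a new process for every request. The process pool mentioned earlier could look roughly like the sketch below, which reuses a fixed number of children and queues the remaining tasks. ProcessPool and createTaskPool are hypothetical names, and the pool deliberately ignores children that crash or exit, which a production pool would have to handle.

```javascript
const os = require('os')
const { fork } = require('child_process')

// Hypothetical minimal pool: at most `size` children, extra tasks wait in a queue.
class ProcessPool {
  constructor(size, spawn) {
    this.size = size
    this.spawn = spawn // Factory that returns a new child process
    this.spawned = 0
    this.idle = [] // Children ready for work
    this.waiting = [] // Tasks waiting for a free child
  }

  run(message, onDone) {
    let child = this.idle.pop()
    if (!child && this.spawned < this.size) {
      child = this.spawn()
      this.spawned++
    }
    if (!child) {
      this.waiting.push({ message, onDone }) // All children are busy
      return
    }
    child.once('message', (reply) => {
      this.idle.push(child) // The child is free again
      const next = this.waiting.shift()
      if (next) this.run(next.message, next.onDone)
      onDone(reply)
    })
    child.send(message)
  }
}

// Assumes cpuBoundTask.js from above is in the current working directory.
function createTaskPool() {
  return new ProcessPool(os.cpus().length, () => fork('./cpuBoundTask'))
}
```

With a pool created once at startup, the request handler could call pool.run('Start', () => res.end()) instead of forking. Sizing the pool by the number of CPU cores is a common starting point rather than a rule.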

Solution 3: Using worker threads

Similar to the previous solution, delegate CPU-bound tasks to separate threads using the worker_threads module. Threads are less resource intensive than processes.

    const http = require('http')
    const { Worker, isMainThread, parentPort } = require('worker_threads')

    if (isMainThread) {
      http.createServer((req, res) => {
        if (req.url === '/run') {
          const worker = new Worker(__filename) // Load the current file into the worker
          worker.on('message', () => {
            res.end()
          })
          worker.on('error', () => {
            res.statusCode = 500 // Do not leave the request hanging if the worker fails
            res.end()
          })
        } else {
          res.statusCode = 404
          res.end()
        }
      })
        .listen(3000, () => console.log('Listening'))
    } else { // Worker thread
      console.log('Start')
      for (let i = 0; i < Number.MAX_SAFE_INTEGER; i++) { }
      console.log('End')
      parentPort.postMessage('Done') // Send message to parent
    }
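In practice a worker usually needs input and returns a result. The sketch below passes input through workerData and wraps the worker in a Promise. runInWorker and the summing loop are hypothetical stand-ins for real work, and the worker source is kept inline (using the eval option) only so the example fits in one file.

```javascript
const { Worker } = require('worker_threads')

// Worker code as a string, run with { eval: true } so no second file is needed.
const workerSource = `
  const { parentPort, workerData } = require('worker_threads')
  let sum = 0
  for (let i = 0; i < workerData.iterations; i++) sum += i
  parentPort.postMessage(sum) // Send the result to the main thread
`

// Run the loop in a worker thread and resolve with its result.
function runInWorker(iterations) {
  return new Promise((resolve, reject) => {
    const worker = new Worker(workerSource, {
      eval: true,
      workerData: { iterations } // Input handed to the worker
    })
    worker.once('message', resolve)
    worker.once('error', reject)
    worker.once('exit', (code) => {
      if (code !== 0) reject(new Error(`Worker stopped with exit code ${code}`))
    })
  })
}

runInWorker(1e6).then((sum) => console.log('Sum computed in worker:', sum))
```

Inside a request handler, the same helper could be used as runInWorker(n).then(() => res.end()), with the main thread free to handle other events while the worker computes.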

Conclusion

Managing CPU-bound tasks in Node.js can be challenging due to its single-threaded, event-driven architecture. However, by using setImmediate, child processes, or worker threads, you can prevent the event loop from blocking and ensure your server remains responsive under heavy computational load.
